Amazing New First-Person-View Machine Learning Dataset (Ego4D)

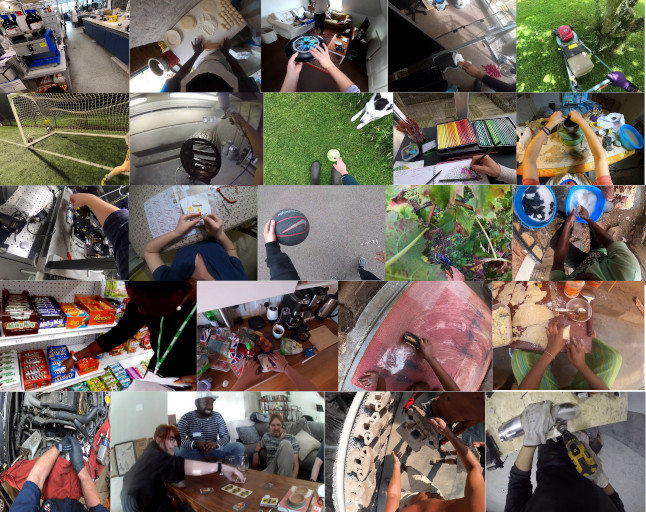

I found an amazing dataset of over 4,000 hours of first-person-view video footage of people doing normal everyday tasks, totaling more than 5 TB of data! Examples: taking out the garbage, playing board games, bike riding, etc.

Last updated 2022-02-18: the dataset is now live!

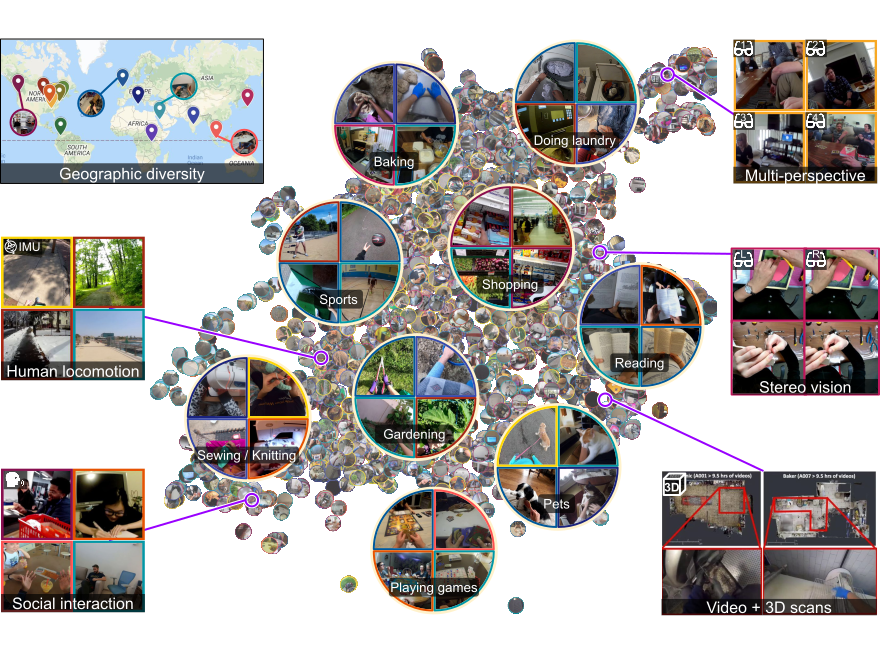

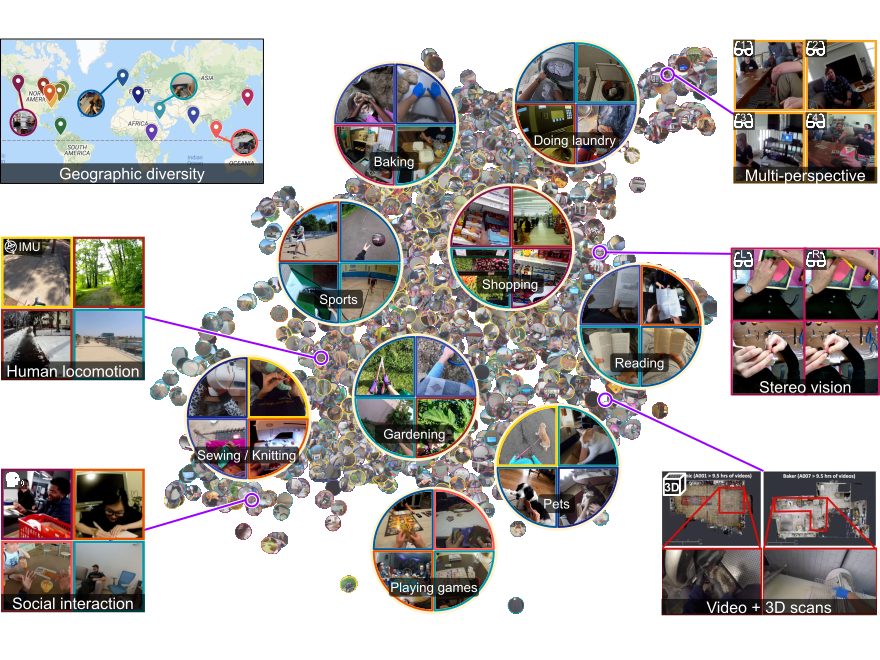

Egocentric 4D Perception (EGO4D)

They have an awesome latent space explorer.

The link below is the live, interactive version of the view you see above.

Okay, cool, but WTF is it?

It's a massive collection of everyday-life video recorded from people's own eye-level vantage point, which is how humans see the world.

It has multiple datasets:

Transcriptions of everything

Eye tracking

Object Segmentation

Reconstructions of 3D environments

Motion capture

Why is this important?

New datasets = amazing new algorithms to solve cool problems

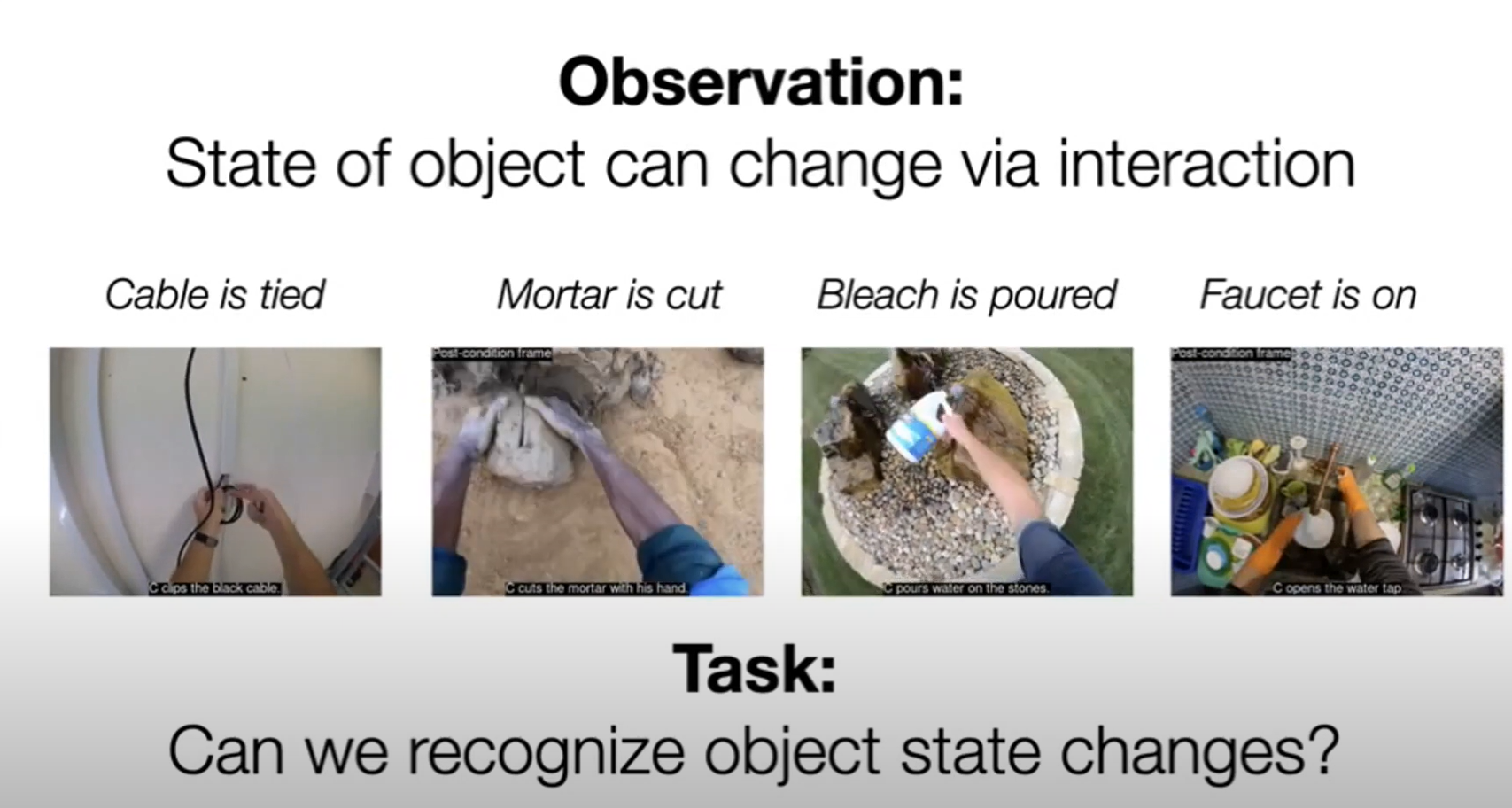

Problems like:

"Computer, where did I leave my keys?"

Having a robot understand who is talking to whom in a conversation.

And so much more!

Problems like these aren't easily solvable without really good data. I predict this dataset will be a big source of quality machine learning research in the years to come. 😃

2-hour talk given by the creators

Highlights

Source (timestamp 2:19): https://drive.google.com/file/d/1oknfQIH9w1rXy6I1j5eUE6Cqh96UwZ4L/view

So where do I download it?

You can't yet :/ It's being released at the end of February 2022, so you're probably reading this after it's already available!

Update! The dataset is now live!

Looks like it was released yesterday. Nice! Today is 2022-02-18.

LINK

https://ego4d-data.org/#download

For the nerds, here is the research paper!

Direct pdf link

https://arxiv.org/pdf/2110.07058.pdf

Get involved!

Takeaway

I hope y'all ML researchers make great use of this dataset!

I'm excited to see what comes next!

A great new class of dataset that unlocks awesome robotics and VR applications.

Thanks for reading! 😎

Author

by Oran Collins

github.com/wisehackermonkey